Originality AI’s AI Originality Score is a tool that measures the AI’s confidence in predicting whether content was produced by certain AI writing tools, providing insight into the potential use of AI in text generation.

The introduction of ChatGPT and other AI tools has sparked a debate about their impact on various industries. In academia, there is concern about plagiarism and about students submitting essays written entirely by AI. Google has reportedly viewed these tools as a threat to its search engine business, as they could flood the internet with AI-generated content. Other fields in which content can be generated by AI, such as online blogging and journalism, are affected as well.

My key argument is that in the near future, it is likely that the majority of written text will be in the form of a hybrid, partially generated by AI and partially written by humans. Therefore, it is important that there is full disclosure about the extent to which AI was used to generate any given content. To address this issue, I proposed the BW-AI Score, a scale that measures the involvement of AI in the creation of content, with “B” representing “generated by AI” and “W” representing “generated with AI.”

A few days later, Edward Tian introduced GPTZero, an app that estimates the likelihood of a given text being AI-generated, providing a simple yes/no answer about AI involvement. While this was a step in the right direction, I believed that there was still a need for tools that could assess the balance of human and AI involvement in text generation. More recently, Originality AI has developed a similar tool called the AI Content Detector Chrome Extension, which claims to have a 94%+ accuracy rate in predicting the use of popular AI writers such as GPT-3, GPT-2, GPT-NEO, and GPT-J.

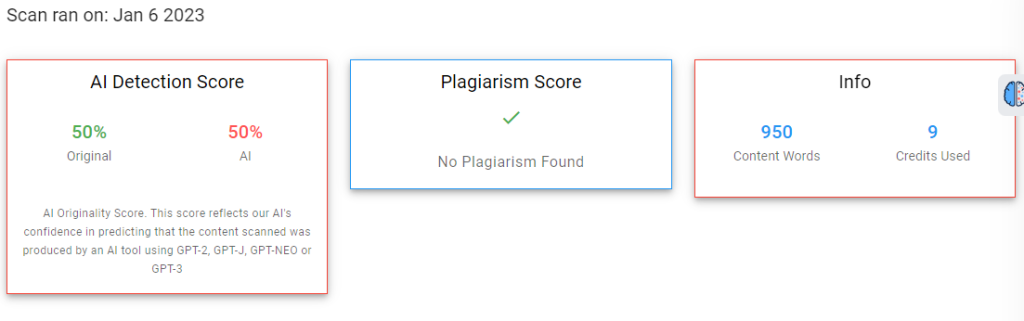

I recently put Originality AI’s AI Originality Score to the test on a number of my own texts. The score measures the AI’s confidence in predicting whether the content scanned was produced by an AI tool using GPT-2, GPT-J, GPT-NEO, or GPT-3.

I tested four of my texts: The Better Ones, The Cruel Dreamer, The Battle for Bucharest, and BW-AI Score. The results for these texts were as follows: The Better Ones scored 50% original and 50% AI (see screenshot below), The Cruel Dreamer scored 38% original and 62% AI, The Battle for Bucharest scored 40% original and 60% AI, and BW-AI Score scored 99% original and 1% AI. No plagiarism was detected in any of the texts.

The results were generally in line with my own assessment of the texts: I personally estimated that they were approximately 60-70% original and 30-40% AI. It’s worth noting, however, that the AI Originality Score provided by Originality AI reflects the likelihood that the entire text was generated by AI, rather than the balance of human and AI contribution to the text. This led to some confusion on my part, as my first impression upon reading the results was that the score quantified the balance between AI and human creators.

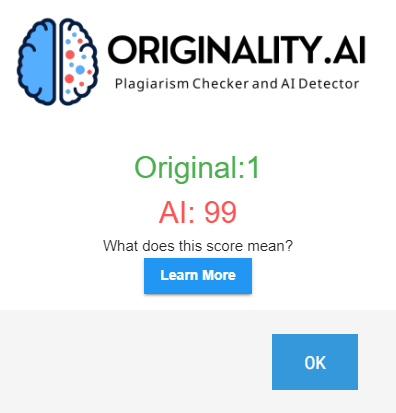
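To make the distinction concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Segment` class, the `ai_share` function, and the hand-labelled sample segments are hypothetical and have nothing to do with how Originality AI works internally. The only figure taken from my tests is the 62% AI score reported for The Cruel Dreamer.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A piece of text with a hand-assigned authorship label (hypothetical)."""
    text: str
    written_by_ai: bool


def ai_share(segments):
    """Return the fraction of words that sit in AI-written segments.

    This is the 'balance of contribution' reading of a score:
    how much of the text came from AI, measured by word count.
    """
    total_words = sum(len(s.text.split()) for s in segments)
    if total_words == 0:
        return 0.0
    ai_words = sum(len(s.text.split()) for s in segments if s.written_by_ai)
    return ai_words / total_words


# Illustrative, made-up segments: one human-written, one AI-written.
segments = [
    Segment("I drafted this opening paragraph myself", written_by_ai=False),
    Segment("The model suggested this closing line", written_by_ai=True),
]

# Reading 1: balance of contribution (what I first assumed the score meant).
balance = ai_share(segments)

# Reading 2: detector confidence that the WHOLE text is AI-generated.
# 0.62 is the score reported for The Cruel Dreamer; it is a single
# probability-like number and says nothing about which parts are AI.
detector_confidence = 0.62

print(f"Share of words written by AI: {balance:.0%}")
print(f"Detector confidence that the entire text is AI: {detector_confidence:.0%}")
```

The two numbers answer different questions, so a 62% AI score should not be read as "62% of the words were written by AI".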

I also tested four texts that were 100% AI-generated. The scores for these texts were as follows: Not a God scored 18% original and 82% AI, He’s 37 scored 1% original and 99% AI (see screenshot below), AI Hamlet scored 2% original and 98% AI, and By AI or With AI? scored 4% original and 96% AI. Again, no plagiarism was detected. Apart from Not a God, all of the results showed a high likelihood that these texts were generated by AI.

I then attempted something cheeky and asked ChatGPT to write a poem “that is so good that no one can tell it was written by AI”. Here’s the result:

Rising early, before the dawn

I slip out of bed, my feet on the lawn

The dew is still fresh, the air crisp and cool

As I set out on my morning rule

The sun is a sliver, peeking over the hill

As I start my jog, a personal thrill

Birds chirping and singing, a joyous refrain

Nature’s orchestra, a beautiful strain

The world is still sleeping, as I run free

My thoughts my own, just me and the trees

Breathing in the air, feeling alive

This is my solace, my daily thrive

As the miles pass by, my body awakes

And I feel alive, no longer fake

This is my time, to feel and to be

A small part of something, vast and free

So I’ll keep on running, every morning’s delight

For in this small moment, everything feels right

The world is mine, and I am alive

Thanks to this run, and the gift of the sky

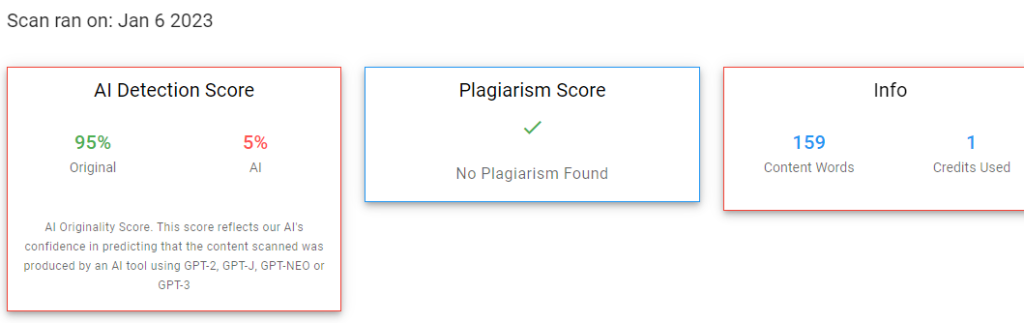

I then analysed it with Originality AI, which scored it 95% original and 5% AI (see screenshot).

I then asked ChatGPT whether it knows how to write a poem that cannot be detected as written by AI. Here’s the response (screenshot below):

As a large language model, I have been trained on a diverse range of texts and have learned to generate human-like language. However, it is possible for a reader to recognize that a text was generated by a machine, especially if it exhibits certain characteristics that are commonly associated with AI-generated texts. Some of these characteristics include repetitive or formulaic language, an overuse of uncommon words or phrases, and an inability to fully understand or convey the subtle complexities and nuances of human language.

To write a poem that is not easily detectable as being written by AI, it may be helpful to focus on using more varied and complex language, incorporating elements of personal experience or emotion, and avoiding overly mechanical or formulaic structures. It may also be useful to incorporate sensory details and figurative language to help bring the poem to life and give it a more human-like quality. Ultimately, the key to writing a poem that is not easily detectable as being written by AI is to use language in a way that is natural, expressive, and evocative.

This is potentially how users might try to cheat Originality AI and similar tools: by asking ChatGPT to write specifically in a way that avoids detection. This is a major concern.

Overall, Originality AI’s AI Originality Score appears to be a useful tool for predicting the use of certain AI writing tools. However, it would be helpful if the creators of the tool considered introducing a feature that quantifies the balance of human and AI contribution to a given text. As AI tools for text generation become more widespread, it will be crucial to be able to make this distinction and to help people be transparent about the extent to which AI is involved in the creation of written content.