As AI chatbots like ChatGPT become increasingly popular and are potentially integrated into word processing programs like Microsoft Office and Google Docs, the majority of written text will likely be a hybrid: partially generated by AI and partially written by humans.

In this context, the more pressing issue will be quantifying how much AI was involved in generating a text, rather than simply determining whether it was involved at all.

Recently, Edward Tian introduced GPTZero, an app that estimates the likelihood that a given text is AI-generated. Currently, the app answers a simple yes/no question about AI involvement, but in the future it will likely be able to express this likelihood as a percentage. Tian’s approach differs from the BW-AI Score I proposed in that it targets plagiarism, that is, individuals who do not admit to using AI to produce an essay or article. In contrast, the BW-AI Score assumes that individuals are willing to disclose the extent of AI involvement in text generation, and provides a score to help them describe it.

If AI chatbots become as popular as Google, most people who write anything will likely be using them. And if chatbots are built into word processing programs, the resulting text will likely be a hybrid of human and AI-generated content. To evaluate such content accurately, we will need a way to quantify the extent of AI involvement. Tools such as GPTZero can estimate the likelihood that a text is AI-generated, but we will also need tools to assess the balance of human and AI involvement in producing it.

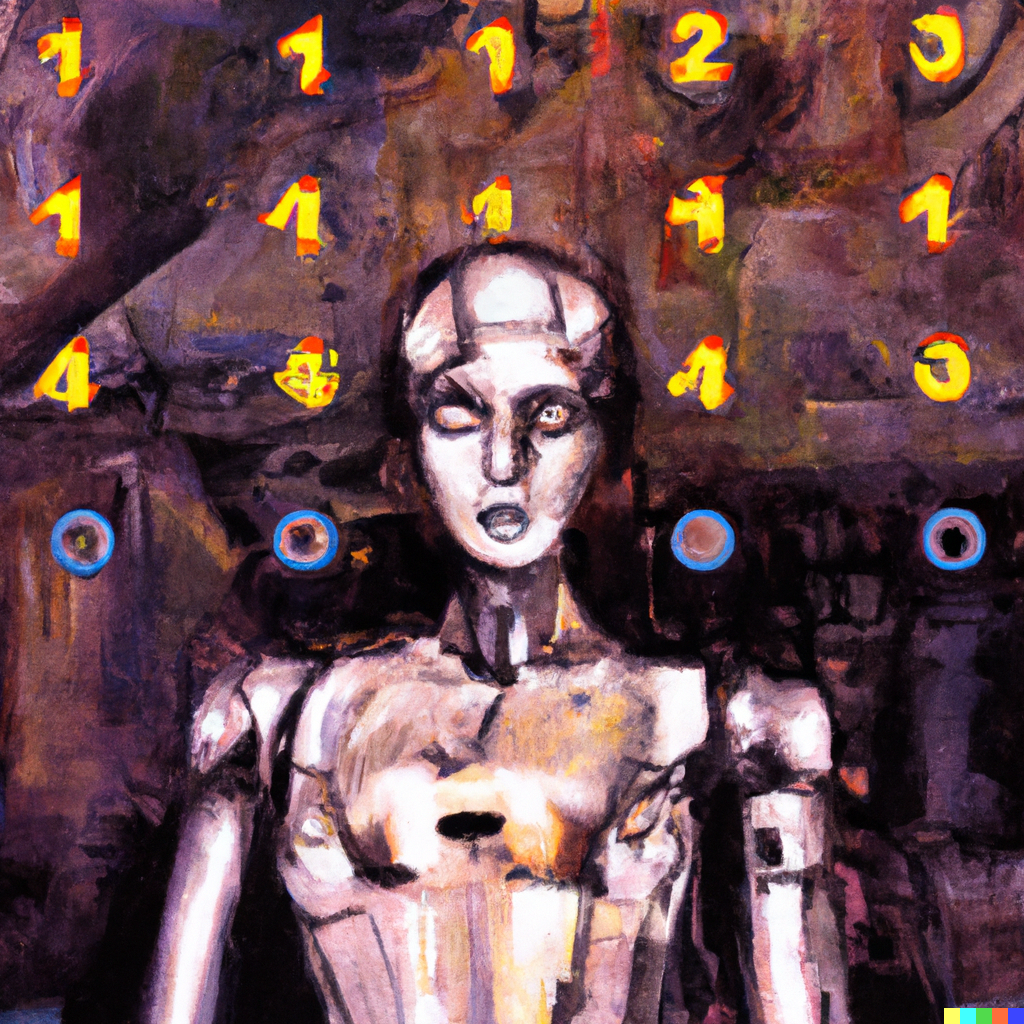

Text BW-AI Score: 2 | Image BW-AI Score: 10